Node.js is often considered unsuitable for CPU-intensive applications.

The way Node.js works makes it shine when dealing with I/O-intensive tasks, which is arguably what most web servers do. But unfortunately, the same properties that make Node.js ideal for I/O tasks, also cause it to fall short when dealing with CPU-intensive tasks. Like everything else in software, that’s a trade-off.

With that being said, and while running CPU-bound tasks is definitely not Node’s core use case, there are ways to make it work. Since its inception, Node.js has made significant progress on that front, and you should now be able to run occasional CPU-bound tasks with reasonable performance.

How would you do that? Bear with me! We’ll answer the following questions:

- What are CPU-bound tasks?

- Why does Node.js have trouble running CPU-bound tasks?

- How can we overcome these issues and effectively run CPU-bound tasks in Node.js?

If you’re not interested in a deep understanding of the topic and just looking for some worker threads code examples and explanations, you can skip to that section.

What are CPU-bound (and I/O-bound) tasks?

In case this is the first time you’ve heard the terms CPU-bound or I/O-bound, we’ll go over them briefly. They’re quite intuitive.

A program is said to be bound by a resource if that resource is what limits the rate of progression of that program. In other words, a program is bound by a resource if it would go faster if that resource were faster.

Most programs are either CPU-bound or I/O-bound, which means their execution speed is limited by either the CPU or the I/O (= Input/Output) subsystems (the disk, the network, etc.).

Examples of CPU-bound tasks would be tasks that require heavy computations or a large number of operations, such as image processing, compression algorithms, matrix multiplication, or just really long (possibly nested) loops.

Some examples of I/O-bound tasks would be reading a file from the disk, making a network request, or querying the database.
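To make the distinction concrete, here is a minimal sketch that times one task of each kind. The helper name sumOfSquares and the use of process.execPath (the Node.js binary itself, chosen only because it is a file that certainly exists) are assumptions made for illustration; the actual timings depend entirely on your hardware.

```javascript
const fs = require('fs');

// CPU-bound: the time is spent computing, so a faster CPU finishes sooner.
// (sumOfSquares is a hypothetical helper used only for this illustration.)
function sumOfSquares(limit) {
  let total = 0;
  for (let i = 1; i <= limit; i++) {
    total += i * i;
  }
  return total;
}

console.time('CPU-bound loop');
sumOfSquares(1e7);
console.timeEnd('CPU-bound loop');

// I/O-bound: the time is mostly spent waiting for the disk, so a faster CPU
// barely helps. The file read here is an arbitrary choice for the demo.
console.time('I/O-bound read');
const readDone = fs.promises.readFile(process.execPath).then((contents) => {
  console.timeEnd('I/O-bound read');
  return contents.length;
});
```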

As we’ll soon see, Node.js, due to its architecture and execution model, does a fantastic job when dealing with I/O-bound tasks, but tends to have some problems with CPU-bound tasks.

Why are CPU-bound tasks problematic in Node.js?

To understand why Node.js has trouble running CPU-bound tasks, we need to take a step back to discuss its inner workings. The reasons are rooted in the way Node.js is designed, so by looking at its architecture and execution model, we can learn why Node.js behaves the way it does in certain situations.

How Node.js works

As you’ve probably heard by now, Node.js is single-threaded in nature. It is based on an event-driven architecture and provides an API to access asynchronous non-blocking I/O. These are a lot of complicated-sounding terms, but the differentiating factor mostly comes down to how Node.js handles concurrency.

While most other programming languages and runtimes use threads to handle their concurrency, Node.js uses the event loop and non-blocking I/O for concurrency. In other words, whereas web servers written in Java, for instance, might allocate a separate thread for each incoming request (or each client), Node.js will handle all of the incoming requests in a single thread and use the event loop (which we’re about to discuss) to coordinate the work.

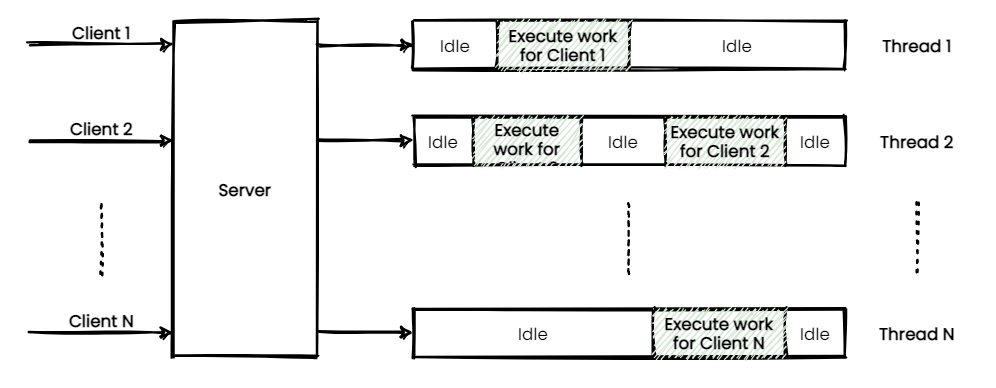

To illustrate, a classic Java server might look like this:

Note how each client gets assigned its own thread. This allows the server to handle the requests of multiple clients in parallel.

Also, note how threads are often underutilized. They sit idle when there isn’t work to perform for their particular client.

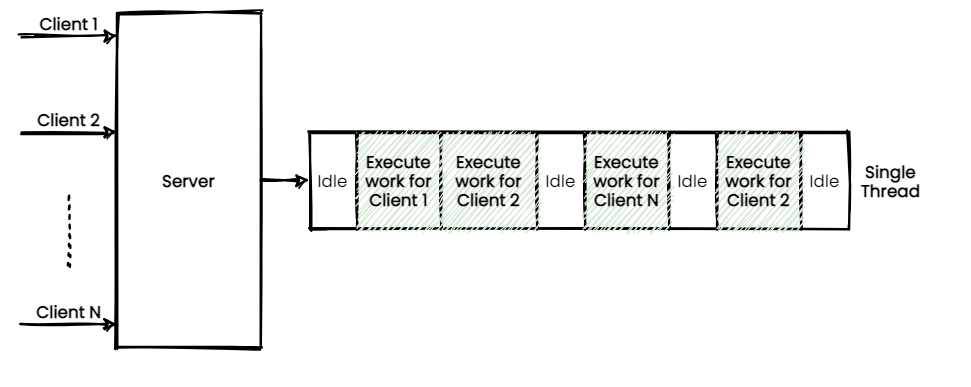

A Node.js server might look like this:

Note that here, a single thread is used to handle the requests from all the different clients. This results in much higher utilization, as the thread only sits idle when there isn’t any work to perform.

However, you can also note that in a single instance of Node.js, since there’s only a single thread executing JavaScript, the work isn’t actually performed in parallel.

So how does Node.js achieve adequate performance with only a single thread? How can it deal with more than a single request at a time? This is where the asynchronous non-blocking I/O and the event loop come in!

Node.js can run I/O operations in a non-blocking way, meaning other code (and even other I/O operations) can be executed while an I/O operation is in progress. Put differently, instead of having to ‘wait’ for an I/O operation to complete (and essentially waste CPU cycles sitting idle), Node.js can use the time to execute other tasks.

When the I/O operation completes, it’s the event loop’s responsibility to give back control to the piece of code that is waiting for the result of that I/O operation.

To summarize, while the CPU-bound part of the code is executed synchronously in a single thread, the I/O operations are executed asynchronously, without blocking the execution in the main thread (hence the term non-blocking). The event loop is responsible for coordinating the work, and deciding what should be executed at any given time. Essentially, this is how Node.js achieves concurrency.
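The ordering described above can be observed with a small sketch. It records when each step happens; the file being read (process.execPath) and the variable names are assumptions chosen only to keep the example self-contained.

```javascript
const fs = require('fs');

const order = [];

order.push('1: read requested');

// fs.readFile() returns immediately; the callback is invoked later by the
// event loop, once the read has completed in the background.
const readDemo = new Promise((resolve, reject) => {
  fs.readFile(process.execPath, (err) => {
    if (err) return reject(err);
    order.push('3: read completed');
    resolve(order);
  });
});

// This line runs while the read is still in progress.
order.push('2: other code ran in the meantime');

readDemo.then((steps) => console.log(steps.join('\n')));
```

The main thread never waits for the disk: step 2 is always recorded before step 3, regardless of how fast the read is.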

But there’s one question left. If Node.js is single-threaded, how can it possibly have non-blocking I/O? How can other code (and other I/O operations) be executed while an I/O operation is currently in progress?

Well, this is where things get interesting. For starters, Node.js isn’t exactly single-threaded. Node.js is indeed single-threaded in terms of its execution model, meaning only a single JavaScript instruction can run at any given moment within the same context. That, however, doesn’t mean Node.js can’t utilize additional threads to run native code behind the scenes. (Spoiler: or even spawn additional threads to run JavaScript in a separate context, but within the same process, as we’ll later see with worker threads). This is why we say Node.js is single-threaded in nature. Even though in practice multiple threads are used, the execution model of Node.js is still based on a single-threaded event loop.

Fun Fact: It’s worth noting that this single-threaded execution model is not a product of the JavaScript language itself. In fact, JavaScript is neither inherently single-threaded nor multithreaded as a language. The language specification doesn’t require either. The decision is ultimately left up to the runtime environment (Node.js or the browser, for instance).

With that in mind, let’s take a look under the hood of Node.js to better understand how non-blocking I/O is achieved.

When talking about the building blocks of Node.js, the first dependency that comes to mind is probably V8. V8 is a JavaScript engine, meaning it’s the component that actually executes JavaScript code within Node.js. V8 is open-source, written in C++, and known for being highly optimized. It’s maintained by Google and is being used as the JavaScript engine in Chrome.

The second, and arguably more interesting dependency of Node.js, is libuv. libuv is a native, open-source library that provides Node.js with its asynchronous, non-blocking I/O.

libuv also implements the Node.js event loop.

Basically, the operating systems Node.js runs on already provide non-blocking variants for most I/O operations, but each operating system uses a slightly different mechanism to achieve it. Even within the same operating system, some I/O operations are different from others, and some don’t even have a non-blocking variant.

To combat all these inconsistencies, libuv provides an abstraction around non-blocking I/O. It unifies the non-blocking behavior across different operating systems and I/O operations, thus allowing Node.js to be compatible with all major operating systems. libuv also manages an internal thread pool for offloading I/O operations for which no non-blocking variant exists at the operating system level. Interestingly, it also utilizes this thread pool to achieve non-blocking behavior for some common CPU-intensive operations, such as the crypto and zlib modules (which are used for hashing and compression respectively, operations that are quite common in web servers).
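As a rough illustration of the thread pool at work, the sketch below runs several key derivations with the asynchronous crypto.pbkdf2() while a timer keeps firing on the main thread. The passwords, salt, iteration count, and the helper name hashPassword are arbitrary assumptions for the demo.

```javascript
const crypto = require('crypto');

// Wraps the callback-based crypto.pbkdf2() in a promise.
// The hashing itself runs on libuv's thread pool, not on the main thread.
function hashPassword(password) {
  return new Promise((resolve, reject) => {
    crypto.pbkdf2(password, 'salt', 100000, 64, 'sha512', (err, key) => {
      if (err) return reject(err);
      resolve(key.toString('hex'));
    });
  });
}

// This timer keeps firing while the hashes are being computed,
// showing that the event loop is not blocked.
const tick = setInterval(() => console.log('event loop is still responsive'), 10);

const hashDemo = Promise.all(['first', 'second', 'third', 'fourth'].map(hashPassword));

hashDemo
  .then((hashes) => console.log(`Computed ${hashes.length} hashes`))
  .catch(console.error)
  .finally(() => clearInterval(tick));
```

Swapping in crypto.pbkdf2Sync() would produce the same hashes, but the timer would stay silent until all of them were done.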

Now that we understand how Node.js works and how it’s built under the hood, we should be able to understand why running CPU-bound tasks in Node.js isn’t trivial in terms of performance. Node.js uses a single thread to handle many clients, and while I/O operations can run asynchronously, CPU-intensive code cannot. This means that too many (or too long) CPU-intensive tasks could keep the main thread too busy to handle other requests, practically blocking it.

The Node.js execution model was designed to cater to the needs of most web servers, which tend to be I/O-intensive. To achieve that, it sacrificed its ability to run CPU-intensive code performantly. This tradeoff proved to be successful and contributed immensely to the popularity of Node.js.

But in order to benefit from this tradeoff, we need to play by the rules of Node.js, the most important of which is: Don’t Block the Event Loop. It basically means that we should always keep the work associated with each client at any given time to a minimum. In other words, we need to make sure each callback completes quickly. The way to do so, as you might have guessed, is to minimize the CPU-intensive work done on the main thread.
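A quick way to see what blocking the event loop means in practice: schedule a timer for 0 ms, then keep the main thread busy. The sketch below uses a hypothetical busyWait helper (a deliberately wasteful loop, not something you would use in real code) and an arbitrary 200 ms duration.

```javascript
// Keeps the main thread busy for roughly `ms` milliseconds.
// (A hypothetical helper that simulates a CPU-intensive callback.)
function busyWait(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // spin
  }
}

const scheduledAt = Date.now();

// The timer is due immediately, but it can only fire once the
// currently running synchronous code has finished.
const timerDemo = new Promise((resolve) => {
  setTimeout(() => resolve(Date.now() - scheduledAt), 0);
});

busyWait(200);

timerDemo.then((delay) => {
  console.log(`The 0 ms timer fired after ${delay} ms`);
});
```

The timer cannot fire before the busy loop ends, so the reported delay is at least the 200 ms spent blocking.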

Remember how, in addition to I/O operations, libuv uses its internal thread pool to run some common CPU-intensive operations, such as those of the crypto and zlib modules, in a non-blocking manner? This is Node.js’s way of letting us use these popular CPU-intensive operations while still adhering to its rules and not blocking the event loop.

However, as mentioned earlier, although running CPU-bound tasks isn’t the core use case of Node.js, it does provide the means to do so reasonably well. These means have evolved over the years, and have recently reached a point where you should be able to run CPU-intensive tasks easily and with minimal overhead. The performance obviously won’t match runtimes that were specifically designed for this kind of work, and if your service is truly CPU-intensive then you should probably use the right tool for the job™ and look into options other than Node.js. With that being said, Node.js is now perfectly adequate for the occasional CPU-bound task.

When in doubt, don’t speculate. Profile your application to get the actual answers.

How to run CPU-bound tasks in Node.js?

Now that we’ve gained some insight into why running CPU-intensive tasks in Node.js can be challenging, let’s get our hands dirty with an example. We’ll examine how CPU-bound tasks behave in the context of a Node.js application, identify the challenges, and apply the relevant techniques from the Node.js toolbox to overcome them.

For our example, let’s choose a computationally expensive problem to challenge ourselves with. One that might take long enough to meaningfully block the event loop, and would therefore allow us to get a feel of the issues involved with running CPU-intensive code in Node.js.

The Fibonacci sequence and time complexity

A perfect example of such a problem would be calculating the Fibonacci number at a specific position in the Fibonacci sequence.

The Fibonacci sequence is defined as a sequence of numbers starting with 0 and 1, with each subsequent number being the sum of the two previous numbers in the sequence.

To illustrate, the beginning of the Fibonacci sequence is:

0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233, ...

The Fibonacci sequence can therefore be formally defined in the following manner:

$$
\begin{aligned}
F_0 &= 0 \\
F_1 &= 1 \\
F_n &= F_{n-1} + F_{n-2}
\end{aligned}
$$

The first two lines tell us that the first two items of the sequence are 0 and 1, and the third line demonstrates that in order to calculate the n-th item in the sequence, we should sum the two previous items (n-1 and n-2).

What makes the Fibonacci sequence a perfect example for our purpose is that the simplest way to calculate the Fibonacci number at a given position in the sequence is the recursive solution, which has a time complexity of O(2^n). This means its running time grows exponentially with the input (n). In other words, this algorithm becomes very slow very quickly as n increases.
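A rough justification for the exponential bound, writing T(n) for the running time on input n and assuming each call does a constant amount of work c besides its two recursive calls (T and c are notation introduced here for illustration):

$$
T(n) = T(n-1) + T(n-2) + c \;\le\; 2\,T(n-1) + c \quad\Longrightarrow\quad T(n) = O(2^n)
$$

The bound isn’t tight: the number of calls actually grows roughly like φ^n, where φ ≈ 1.618 is the golden ratio. That is still exponential, which is all we need here.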

If you need some help understanding the concept of measuring the runtime complexity of algorithms with the big-O notation, I highly recommend watching this great video. Nevertheless, it’s not required for our purpose.

This recursive solution can be written in the following way:

    function fibonacci(num) {
      if (num <= 1) return num;
      return fibonacci(num - 1) + fibonacci(num - 2);
    }

There are obviously much more efficient ways to solve this problem. The recursive solution is the simplest, but also the most computationally expensive. We intentionally use it to learn more about slow, CPU-intensive tasks in Node.js.
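For comparison, one of those more efficient approaches might look like the following iterative sketch, which runs in linear time. The function name fibonacciIterative is an assumption; the rest of this article deliberately sticks with the slow recursive version.

```javascript
// Iterative variant: O(n) time, O(1) memory.
// Results are exact only up to n = 78; beyond that, the values exceed
// Number.MAX_SAFE_INTEGER and lose precision.
function fibonacciIterative(num) {
  let previous = 0;
  let current = 1;
  for (let i = 0; i < num; i++) {
    [previous, current] = [current, previous + current];
  }
  return previous;
}

console.log(fibonacciIterative(13)); // 233
```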

Running it on a web server

Now, let’s say we want to create an http server that will generate Fibonacci numbers upon request. Our server, like most other web servers, should be able to serve multiple concurrent clients. In addition to generating Fibonacci numbers, our server should also respond to any other request with Hello World!.

To meet these requirements, our server needs an endpoint that accepts requests in the format /fibonacci?n=<Number> and responds with the n-th item in the Fibonacci sequence. Requests to any other path should simply respond with Hello World! as long as the server is alive and responsive.

Let’s get to it and start implementing. First, create a new directory for our new server. In that directory, create a new file named fibonacci.js. In this file, we will implement our fibonacci function and export it, so it can be used by other modules:

    function fibonacci(num) {
      if (num <= 1) return num;
      return fibonacci(num - 1) + fibonacci(num - 2);
    }

    module.exports = fibonacci;

Now, it’s time to implement an http server, so we can expose our fibonacci functionality over the network. To implement our server, we’ll use Node’s built-in http module.

Let’s start by implementing the simplest possible server in a new module named index.js:

    const http = require('http');

    const port = 3000;

    http
      .createServer((req, res) => {
        // Parse the request URL so we can log the requested path
        const url = new URL(req.url, `http://${req.headers.host}`);
        console.log('Incoming request to:', url.pathname);

        res.writeHead(200);
        return res.end('Hello World!');
      })
      .listen(port, () => console.log(`Listening on port ${port}...`));

Our server should now respond to any incoming request with Hello World! and status code 200. It should also log a message with the path to which the request was made.

Let’s fire it up to make sure it works as expected:

    $ node index.js
    Listening on port 3000...

Looks like our server fired up properly. Time for our first request! We can simply enter http://localhost:3000/ in our browser, or use a tool like curl to send a GET request to our server. In a separate terminal window:

    $ curl --get "http://localhost:3000/"
    Hello World!

Nice! Our server is working and responds to incoming requests. Now let’s get it to do what we actually want it to: generate Fibonacci numbers!

To do that, we’ll first import the fibonacci function that we’ve exported from fibonacci.js into our server module. We’ll then modify our server so that incoming requests made to the path /fibonacci (in the format /fibonacci?n=<Number>) will extract the provided argument (n), run our imported fibonacci function to generate the relevant Fibonacci number, and return it as a response to that request. By the end, our index.js module should look like this:

    const http = require('http');
    const fibonacci = require('./fibonacci');

    const port = 3000;

    http
      .createServer((req, res) => {
        const url = new URL(req.url, `http://${req.headers.host}`);
        console.log('Incoming request to:', url.pathname);

        if (url.pathname === '/fibonacci') {
          const n = Number(url.searchParams.get('n'));
          console.log('Calculating fibonacci for', n);
          // Runs synchronously on the main thread
          const result = fibonacci(n);
          res.writeHead(200);
          return res.end(`Result: ${result}`);
        } else {
          res.writeHead(200);
          return res.end('Hello World!');
        }
      })
      .listen(port, () => console.log(`Listening on port ${port}...`));

Trying it out

Our server now supposedly fulfills all its requirements. Requests to path /fibonacci generate the Fibonacci number in position n (as provided in the request) and return the result. Requests to any other path simply respond with Hello World!. Seems perfect, right? Let’s run our server and try it out:

    $ node index.js
    Listening on port 3000...

First, let’s make sure we didn’t break the previous functionality, where Hello World! is returned for any request:

    $ curl --get "http://localhost:3000/"
    Hello World!

Works as expected, great! Now let’s move on to test our server’s newly implemented functionality: generating Fibonacci numbers! As you may recall, these requests should look like this: /fibonacci?n=<Number>. Let’s use curl to try it out:

    $ curl --get "http://localhost:3000/fibonacci?n=13"
    Result: 233

As expected, our server responded with the 13th Fibonacci number, which is 233. The server responded virtually immediately, which was also expected since 13 is a small enough input. So small, that even a function with exponential complexity (like our fibonacci function implementation) could handle it pretty quickly.

But what about larger inputs? The execution time of functions with exponential time complexity can grow wildly with a slight increase in the input size. Let’s try n=46, for instance:

    $ curl --get "http://localhost:3000/fibonacci?n=46"
    # About 21 seconds later...
    Result: 1836311903

The execution time obviously depends on the hardware, but my laptop took no less than 21.384 seconds(!) to return the result. To illustrate how fast the growth is, calculating the 36th number took merely 202 milliseconds! That’s a huge difference.
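If you’d like to measure the growth on your own machine without going through the server, a minimal sketch could look like this. The helper name timeFibonacci and the chosen inputs are assumptions; the measured times will differ from the numbers quoted above.

```javascript
const { performance } = require('perf_hooks');

function fibonacci(num) {
  if (num <= 1) return num;
  return fibonacci(num - 1) + fibonacci(num - 2);
}

// Runs fibonacci(n) once and reports how long it took, in milliseconds.
// (timeFibonacci is a hypothetical helper for this measurement.)
function timeFibonacci(n) {
  const start = performance.now();
  const result = fibonacci(n);
  return { n, result, ms: performance.now() - start };
}

// Small inputs keep the demo fast; raise them gradually to see the
// execution time take off.
for (const n of [20, 25, 30]) {
  const { result, ms } = timeFibonacci(n);
  console.log(`fibonacci(${n}) = ${result} (${ms.toFixed(1)} ms)`);
}
```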

With that being said, we knew that calculating large inputs would take a long time. We’ve intentionally implemented a not-so-efficient algorithm (to say the least), so long execution times were pretty much expected.

Where it fails

But what happens when our server has to deal with multiple concurrent requests? Let’s try it out and see for ourselves.

We’ll start with a request to calculate the 46th Fibonacci number, which as we witnessed earlier, should take about 21 seconds:

    $ curl --get "http://localhost:3000/fibonacci?n=46"

Right after that, while the first request is still running, let’s make an additional request. We’ll send it from another terminal since the first one is still waiting for a response:

    $ curl --get "http://localhost:3000/"

Note that this time, our request should simply return Hello World! without doing any further processing. Previously, this type of request resulted in a virtually instant response.

But, as we can immediately notice, our server is unresponsive. The second request simply hangs, and will only be handled once the first request is done. We had to wait 21 seconds for the second request to complete, even though all it had to do was respond with Hello World!.

Evidently, our assumptions from the previous sections check out. Running potentially long CPU-bound tasks in Node.js without using the proper techniques results in a blocked event loop. This means all further operations are blocked until the end of the blocking operation. In our case, the server is so busy calculating the 46th Fibonacci number that it is unable to even respond with Hello World! to the following request. This is obviously unacceptable in almost all situations.

This is also the reason this article was written. The difficulty with running CPU-intensive tasks is one of the greatest drawbacks of Node.js. But, as we mentioned earlier, there are some possible solutions.

Possible solutions

Now that we understand why Node.js has trouble running CPU-bound tasks, and we’ve seen how it might manifest in a real-world scenario, let’s talk about solutions.

In this section, we’ll explore the possible approaches for dealing with CPU-bound tasks in Node.js:

- Splitting up tasks with setImmediate() (single-threaded)

- Spawning a child process

- Using worker threads

Each of them has its place, and none is completely obsolete. It’s a matter of using the approach that’s right for a particular use-case! This article focuses on the worker threads approach, which happens to be the most recent approach to join the Node.js toolbox. It’s also arguably the most powerful and versatile, as it can be applied in many different situations.

But before we dive into the nitty-gritty details of worker threads, let’s get an overview of the available techniques:

Splitting up tasks with setImmediate()

This approach, unlike the others, doesn’t utilize additional CPU cores. It’s not the focus of the article, so I won’t go into too much detail. It’s still worth getting familiar with, nonetheless.

Looking into the essence of the issues with running CPU-bound tasks in Node.js, we can clearly see that they all stem from one fundamental truth: These long-running tasks block the event loop, keeping it busy. So busy, that it completely starves all other tasks, denying them even a single cycle of execution.

A blocked event loop means complete starvation of other tasks - not a mere slowdown. And we’ve already witnessed the results. A single long-running CPU-bound task, like calculating the 46th Fibonacci number, can utterly block the event loop, so that it can’t even complete a basic operation and respond to a request with Hello World!.

But what if we could ‘split’ these long-running, CPU-bound tasks into smaller, less time-consuming pieces, allowing other code to run in-between them?

This can, essentially, solve our issues. While the throughput doesn’t increase, splitting up the long-running task can dramatically increase the server responsiveness. No more ‘lightweight’ tasks being indefinitely blocked by some long-running, CPU-bound behemoth.

To achieve such a ‘split’, we can use the built-in setImmediate() function. By splitting our CPU-bound operation into several steps, invoking each step with setImmediate(), we basically make the event loop take control between each step. The event loop will then handle pending tasks before moving on to the next step.

This is probably the most basic approach for dealing with CPU-bound tasks in Node.js. Sadly, it has some major flaws:

- Inefficient. Each step deferred with setImmediate() introduces some overhead. This overhead might add up and become substantial as the number of steps increases.

- Only useful when there’s a single CPU-intensive task running at a time. This approach can help keep the server responsive, but it obviously won’t make multiple CPU-intensive tasks running concurrently go any faster. Remember, we’re still utilizing a single thread!

- Cumbersome. Splitting algorithms into steps isn’t always trivial. It can sometimes be difficult to implement elegantly, hence making the code less readable.

All these flaws do not mean this approach is completely useless - just that you should carefully consider whether it’s appropriate before using it. For the occasional CPU-bound task that you expect to run once in a blue moon, this approach might be the simplest and most effective way to get the job done.
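Although this approach isn’t the focus of the article, a minimal sketch may help show what ‘splitting up’ looks like. The recursive Fibonacci function doesn’t split naturally, so this example counts prime numbers instead; the function name countPrimesInChunks, the chunk size, and the limit are all assumptions chosen for illustration.

```javascript
// Counts the primes up to `limit`, processing `chunkSize` candidates per step
// and yielding to the event loop between steps with setImmediate().
function countPrimesInChunks(limit, chunkSize = 1000) {
  return new Promise((resolve) => {
    let candidate = 2;
    let count = 0;

    function isPrime(n) {
      for (let d = 2; d * d <= n; d++) {
        if (n % d === 0) return false;
      }
      return true;
    }

    function runChunk() {
      const chunkEnd = Math.min(candidate + chunkSize, limit + 1);
      for (; candidate < chunkEnd; candidate++) {
        if (isPrime(candidate)) count++;
      }
      if (candidate <= limit) {
        setImmediate(runChunk); // let pending events run before the next chunk
      } else {
        resolve(count);
      }
    }

    runChunk();
  });
}

// This timer fires between chunks instead of waiting for the whole job.
setTimeout(() => console.log('A timer ran while primes were being counted'), 0);

const chunkDemo = countPrimesInChunks(100000);
chunkDemo.then((count) => console.log(`Found ${count} primes`));
```

Note that the total amount of work is unchanged (and slightly increased by the scheduling overhead). Only the responsiveness improves.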

Spawning a child process

The previous approach we introduced, ‘Splitting up tasks with setImmediate()’, can be seen as sort of a workaround. We’ve realized CPU-intensive tasks are blocking the event loop, so we’ve decided to ‘split them up’, allowing the event loop to handle some other tasks in-between the blocking pieces.

It helps, to some extent. But what if, instead of looking for workarounds, we fully embraced the nature of Node.js? What if we completely refrained from running expensive CPU-bound tasks in our main application?

Instead, we can run these tasks in a separate process.

This way, we let our main application do what Node.js does best. We adhere to Node’s rules and avoid blocking the event-loop. In return for using Node.js the way it was designed to be used, our main application would benefit greatly in terms of performance.

On top of that, the task running in a separate process would also go faster. There’s no need to defer steps and run additional tasks ‘in-between’, so all of the resources assigned to the process will be dedicated to running the given task at full speed.

Sounds great, right? Turns out, Node.js has an API exactly for that! It is found in the child_process module, which as the name implies, provides the ability to create child processes, manage them, and communicate with them.

So how do we create a child process? It’s pretty simple. We pass a module path to the child_process.fork() method. This will spawn a new Node.js child process running the given module. This method returns a ChildProcess object, which comes with a built-in communication channel that allows messages to be passed between the parent and child processes.

Each spawned Node.js child process is independent and has its own memory, event-loop, and V8 instance. The only connection between the child and the parent process is the communication channel established between the two.

Let’s give it a try. We’ll make our Fibonacci server spawn a child process to run the CPU-intensive stuff, which is Fibonacci numbers generation. This will keep our server’s event-loop free.

First, create a new module named fibonacci-fork.js. This is the module our child process will run:

    function fibonacci(num) {
      if (num <= 1) return num;
      return fibonacci(num - 1) + fibonacci(num - 2);
    }

    // Compute the requested number, send it back to the parent, then exit
    process.on('message', (message) => {
      process.send(fibonacci(message));
      process.exit(0);
    });

As you can see, it’s a very straightforward module. We need our child process to run the fibonacci function, so we implement it first. Then, we instruct our child process to respond to incoming messages from the parent process (using process.on('message', ...)) by running the fibonacci function and sending back the result as a message to the parent process (using process.send(...)). After that, we instruct the child process to exit (which you shouldn’t normally do, as child processes should usually be reused).

All of that, of course, will happen in an external child process. This means the server running in the parent process gets to keep its event loop free!

Next, we’ll modify our server (index.js). Instead of running the fibonacci function itself, it should:

- Create a child process using fork() based on our newly created module (fibonacci-fork.js)

- Send the child process a message with the required parameter (n) using childProcess.send()

- Listen to incoming messages from the child process using childProcess.on('message', ...)

- Respond to the request with the result received from the child process

This is how it should look:

    const http = require('http');
    const path = require('path');
    const { fork } = require('child_process');

    const port = 3000;

    http
      .createServer((req, res) => {
        const url = new URL(req.url, `http://${req.headers.host}`);
        console.log('Incoming request to:', url.pathname);

        if (url.pathname === '/fibonacci') {
          const n = Number(url.searchParams.get('n'));
          console.log('Calculating fibonacci for', n);

          // Spawn a child process that runs fibonacci-fork.js
          const childProcess = fork(path.join(__dirname, 'fibonacci-fork'));

          // Respond once the child process sends back the result
          childProcess.on('message', (message) => {
            res.writeHead(200);
            return res.end(`Result: ${message}`);
          });

          // Ask the child process to calculate the n-th Fibonacci number
          childProcess.send(n);
        } else {
          res.writeHead(200);
          return res.end('Hello World!');
        }
      })
      .listen(port, () => console.log(`Listening on port ${port}...`));

You can now test it out and see it in action. Let’s send the following request:

    $ curl --get "http://localhost:3000/fibonacci?n=46"

Right after that, while the first request is still running, let’s make an additional request. We’ll send it from another terminal window, since the first one is still waiting for a response:

    $ curl --get "http://localhost:3000/"

The server should stay responsive and handle the default Hello World! requests, even when a /fibonacci request is currently in progress.

In addition, and unlike the previous approach, several concurrent /fibonacci requests should also perform a lot better! This time, they will be executed in parallel, each within its own child process. This is huge! For the first time, our JavaScript code can utilize the power of multiple CPU cores.

Note that in this example, we create a new child process for each incoming request, then exit it when it’s done. You really shouldn’t do that, but rather use a worker pool to reuse existing processes. More on that in the ‘Using a worker pool’ section.
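To give a rough idea of what reusing a process could look like (this is not a full worker pool), here is a sketch with a single long-lived child that serves many requests. Everything in it is an assumption made for illustration: the { id, n } message shape, the helper names fibonacciInChild and shutdown, and the trick of writing the child module to a temporary directory, which is done only so the example fits in one file.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { fork } = require('child_process');

// In a real project this would be a regular module next to index.js.
const childSource = `
  function fibonacci(num) {
    if (num <= 1) return num;
    return fibonacci(num - 1) + fibonacci(num - 2);
  }
  // No process.exit() here: the child stays alive between requests.
  process.on('message', ({ id, n }) => {
    process.send({ id, result: fibonacci(n) });
  });
`;
const childDir = fs.mkdtempSync(path.join(os.tmpdir(), 'fibonacci-child-'));
const childPath = path.join(childDir, 'fibonacci-child.js');
fs.writeFileSync(childPath, childSource);

const child = fork(childPath);
const pending = new Map(); // request id -> { resolve, reject }
let nextId = 0;

// Match each response to the request that produced it.
child.on('message', ({ id, result }) => {
  const request = pending.get(id);
  pending.delete(id);
  if (request) request.resolve(result);
});

// If the child dies, fail whatever was still waiting for it.
child.on('exit', () => {
  for (const { reject } of pending.values()) {
    reject(new Error('Child process exited'));
  }
  pending.clear();
});

function fibonacciInChild(n) {
  return new Promise((resolve, reject) => {
    const id = nextId++;
    pending.set(id, { resolve, reject });
    child.send({ id, n });
  });
}

function shutdown() {
  child.kill();
  fs.rmSync(childDir, { recursive: true, force: true });
}

const childDemo = Promise.all([fibonacciInChild(10), fibonacciInChild(20)]);
childDemo
  .then(([tenth, twentieth]) => console.log(tenth, twentieth))
  .finally(shutdown);
```

Keep in mind that a single child handles its requests one after another, so this avoids the startup cost but gives up parallelism between requests. A pool of several such processes addresses that.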

Using worker threads

We just went over the child processes approach, which seems to solve most of our issues. And indeed, this is how running CPU-bound tasks in Node.js was usually done until worker threads were introduced to Node.js.

The worker threads API resides in the worker_threads module and enables the use of threads to execute JavaScript in parallel.

Worker threads are pretty similar to child processes. Both can be used to run CPU-intensive tasks outside of the main application’s event loop, therefore not blocking it. They also achieve that in a quite similar fashion: by delegating the work to a separate Node.js ‘instance’.

There is, of course, a fundamental difference. While child processes execute the work in a whole different process, worker threads use threads to execute the work within the same process of the main application.

This key difference leads to some very nice benefits worker threads have over child processes:

- Worker threads are lightweight compared to child processes. They consume less memory and start up faster. With that being said, creating worker threads is still quite expensive, and they should generally be reused.

- Worker threads can share memory. In addition to the basic message passing we’ve seen in child processes, worker threads can also transfer ArrayBuffer instances, and even share SharedArrayBuffer instances. Both these objects are used to hold raw binary data, which is a step forward from simply passing serialized messages.

  The difference between ArrayBuffer and SharedArrayBuffer comes down to the distinction between transferring and sharing. Once an ArrayBuffer is transferred to a worker thread, the original ArrayBuffer is cleared and can no longer be accessed or manipulated by the main thread or any other worker thread. Conversely, in SharedArrayBuffer, the content is actually shared, and it can be accessed and manipulated simultaneously by the main thread and any number of worker threads (in which case the synchronization has to be manually managed by the developer).
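The difference between transferring and sharing can be seen in a short sketch. It uses a MessageChannel for the transfer (so no worker is needed for that part) and an inline worker script, passed with the eval option, for the sharing part. Both choices, along with the variable names, are assumptions made to keep the example in a single file.

```javascript
const { Worker, MessageChannel } = require('worker_threads');

// Transferring: ownership moves to the receiving side,
// and the sender's copy is emptied immediately.
const { port1 } = new MessageChannel();
const transferred = new ArrayBuffer(8);
port1.postMessage(transferred, [transferred]);
console.log('Bytes left after transfer:', transferred.byteLength); // 0
port1.close();

// Sharing: both threads see the very same memory.
const shared = new SharedArrayBuffer(4);
const counter = new Int32Array(shared);

// Inline worker source, used here only for brevity.
const workerSource = `
  const { workerData } = require('worker_threads');
  // Atomics keeps the update safe even if other threads touch the same memory.
  Atomics.add(new Int32Array(workerData), 0, 5);
`;

const sharedDemo = new Promise((resolve, reject) => {
  const worker = new Worker(workerSource, { eval: true, workerData: shared });
  worker.on('error', reject);
  // Once the worker has exited, read the value it wrote from the main thread.
  worker.on('exit', () => resolve(Atomics.load(counter, 0)));
});

sharedDemo.then((value) => console.log('Counter as seen by the main thread:', value));
```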

Worker threads are clearly useful. But to truly understand them, it’s important to make a distinction between worker threads in Node.js and the ‘classic’ threads you may be used to from classic threaded programming languages, like Java or C++. The two are quite different.

In Node.js, each worker thread has its own independent Node.js runtime (including its own V8 instance, event loop, etc.) with its own isolated context. This is completely different from ‘classic’ threads in other programming languages, which usually run within the same runtime and deal with the same context of the main thread.

If you’ve ever used a threaded programming language, you probably remember that by default, any thread can access and manipulate any variable at any time, even if a different thread is currently using this variable. This can happen because all of the threads operate within the same context, and can lead to some nasty errors. It’s on you, as the developer, to use the language’s synchronization mechanisms to make sure the different threads play nice together.

Worker threads, by contrast, operate in an isolated context of their own. By default (that is, unless you explicitly use SharedArrayBuffer), they don’t share anything with the main thread (or with any other worker thread), and therefore no thread synchronization is usually needed.

This difference has both negative and positive implications (everything is a trade-off, remember?). On one hand, worker threads might be considered less ‘powerful’ than classic threads, as they’re less flexible. But on the other hand, worker threads are a lot safer than classic threads.

Generally speaking, classic multithreaded programming is considered hard and error-prone (with some errors being rather hard to detect). Worker threads, since they run in a separate context and don’t share anything by default, essentially eliminate a whole class of potential multithreading-related errors, such as race conditions and deadlocks. All while remaining flexible enough for the vast majority of use cases.
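To see this isolation in action, consider the following small sketch. It assumes an inline worker created with the eval option, and demoIsolation and isolatedWorkerSource are illustrative names only. The worker receives a clone of the object passed as workerData, so mutating it inside the worker leaves the main thread’s object untouched:

```javascript
const { Worker } = require('worker_threads');

// Hypothetical inline worker: it mutates its own copy of workerData.
const isolatedWorkerSource = `
  const { parentPort, workerData } = require('worker_threads');
  workerData.counter += 1; // only the worker's clone changes
  parentPort.postMessage(workerData.counter);
`;

function demoIsolation() {
  const state = { counter: 0 };
  return new Promise((resolve, reject) => {
    const worker = new Worker(isolatedWorkerSource, {
      eval: true,
      workerData: state, // cloned, not shared
    });
    worker.once('error', reject);
    worker.once('message', (workerCounter) => {
      // Compare the worker's view with the main thread's view.
      resolve({ workerCounter, mainCounter: state.counter });
    });
  });
}
```

The promise should resolve with workerCounter set to 1 and mainCounter still at 0, with no locks or other synchronization involved.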

Now that we understand the rationale behind worker threads and their benefits over child processes, and can tell the difference between worker threads and ‘classic’ threads, we’re ready to proceed to write some code. Time to make our Fibonacci server use worker threads!

Before we start implementing, let’s review some of the core terms and methods in the worker_threads module, all of which can be found in the official documentation. It might be difficult to understand without enough context, so don’t get bogged down on the details. Read it and move on:

- new Worker(filename, [options]) - The constructor used to create new worker threads. The created worker threads will execute the contents of the module referred to by filename. Optionally, additional options can be provided. One common option is workerData.

- worker.workerData - Can be any JavaScript value. This is a clone of the data passed to this worker thread constructor using the workerData option. Essentially, this is a way to provide a new worker thread with some data upon creation.

- worker.isMainThread - Is true if the code is not running inside a worker thread, but rather in the main thread.

- worker.parentPort - The worker’s end of the two-way communication channel between the worker thread and the parent thread. Messages sent from the worker thread using parentPort.postMessage() are then available in the parent thread using worker.on('message'). Conversely, messages sent from the parent thread using worker.postMessage() are then available in the worker thread using parentPort.on('message').

Again, it’s completely fine if it doesn’t make sense right away. Soon we’ll see some actual code, and things will hopefully become clearer.
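Before the real implementation, here is a tiny sketch of how these pieces fit together. It is not part of the Fibonacci server: the inline worker (created with the eval option), the askWorker function, and the prefix field are all invented for illustration. The parent sends a message with worker.postMessage(), and the worker answers through parentPort.postMessage(), using the workerData it was created with:

```javascript
const { Worker } = require('worker_threads');

// Hypothetical inline worker that echoes every message it receives.
const echoWorkerSource = `
  const { parentPort, workerData, isMainThread } = require('worker_threads');
  parentPort.on('message', (text) => {
    parentPort.postMessage({
      reply: workerData.prefix + text,
      inMainThread: isMainThread, // false inside a worker
    });
  });
`;

function askWorker(text) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(echoWorkerSource, {
      eval: true,
      workerData: { prefix: 'echo: ' },
    });
    worker.once('error', reject);
    // Parent side of the channel: receive the worker's reply.
    worker.once('message', (message) => {
      resolve(message);
      worker.terminate();
    });
    // Parent side of the channel: send a message to the worker.
    worker.postMessage(text);
  });
}
```

For example, askWorker('hi') should resolve with an object whose reply is 'echo: hi' and whose inMainThread flag is false.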

Let’s begin implementing. First, we have to create a new worker module. We’ll call it fibonacci-worker.js. This module will contain the code that will ultimately be executed inside the worker threads. In addition, we’ll use this module to encapsulate the creation of workers:

```javascript
 1  const {
 2    Worker,
 3    isMainThread,
 4    parentPort,
 5    workerData,
 6  } = require('worker_threads');
 7
 8  function fibonacci(num) {
 9    if (num <= 1) return num;
10    return fibonacci(num - 1) + fibonacci(num - 2);
11  }
12
13  if (isMainThread) {
14    module.exports = (n) =>
15      new Promise((resolve, reject) => {
16        const worker = new Worker(__filename, {
17          workerData: n,
18        });
19        worker.on('message', resolve);
20        worker.on('error', reject);
21        worker.on('exit', (code) => {
22          if (code !== 0) {
23            reject(new Error(`Worker stopped with exit code ${code}`));
24          }
25        });
26      });
27  } else {
28    const result = fibonacci(workerData);
29    parentPort.postMessage(result);
30    process.exit(0);
31  }
```

Let’s explain what’s going on:

- Lines 1-6 - Require the relevant pieces from the worker_threads module.

- Lines 8-11 - Declare the fibonacci function.

- Lines 13-26 - This if clause will only be executed when the code is running on the main thread. This is where we encapsulate the creation of workers and wrap them in a promise. Essentially, we export a function that takes a number n and returns a promise. Inside this promise, we create a new worker based on the current module (__filename) and pass it n as workerData. We then proceed to make this promise react appropriately to each of the worker events. In case of a message event, we resolve the promise with the result. In case of an error event, or an exit event with a non-zero exit code, we reject the promise with a relevant error.

- Lines 28-30 - The actual code that will be executed inside the created threads. This is where we invoke the fibonacci function on the relevant number (based on the workerData we passed when creating the worker) and then send the result back to the main thread using parentPort.postMessage(). After that, we instruct the worker to exit (which you shouldn’t normally do, as workers should usually be reused).

Now that we’ve implemented the worker module, all that’s left to do is update the server module (index.js) to use it:

```javascript
 1  const http = require('http');
 2  const fibonacciWorker = require('./fibonacci-worker');
 3
 4  const port = 3000;
 5
 6  http
 7    .createServer(async (req, res) => {
 8      const url = new URL(req.url, `http://${req.headers.host}`);
 9      console.log('Incoming request to:', url.pathname);
10
11      if (url.pathname === '/fibonacci') {
12        const n = Number(url.searchParams.get('n'));
13        console.log('Calculating fibonacci for', n);
14
15        const result = await fibonacciWorker(n);
16        res.writeHead(200);
17        return res.end(`Result: ${result}\n`);
18      } else {
19        res.writeHead(200);
20        return res.end('Hello World!');
21      }
22    })
23    .listen(port, () => console.log(`Listening on port ${port}...`));
```

Simple as that! Since we’ve encapsulated worker creation inside the fibonacci-worker.js module, we now have the benefit of dealing with a simple, promise-based interface!

Note that I’ve decided to use async/await, and therefore invoked fibonacciWorker() with await (line 15) and declared the server’s callback function async (line 7). You could, of course, use the traditional .then(...) syntax.
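For comparison, here is roughly what the /fibonacci branch could look like with .then(...). This is only a sketch: createFibonacciHandler and its compute parameter are hypothetical names, introduced so that any promise-returning function (such as the one exported by fibonacci-worker.js) can be plugged in. The .catch(...) branch with a 500 response is an addition that the original handler doesn’t have:

```javascript
// `compute` is assumed to be a function that takes a number and returns
// a promise, e.g. the function exported by fibonacci-worker.js.
function createFibonacciHandler(compute) {
  return (req, res) => {
    const url = new URL(req.url, `http://${req.headers.host}`);
    const n = Number(url.searchParams.get('n'));

    // Return the chain so that callers can wait for the response if needed.
    return compute(n)
      .then((result) => {
        res.writeHead(200);
        res.end(`Result: ${result}\n`);
      })
      .catch((err) => {
        // Not in the original server: report worker failures to the client.
        res.writeHead(500);
        res.end(`Error: ${err.message}\n`);
      });
  };
}
```

In the server, the resulting handler would be called from inside the createServer callback for requests to /fibonacci, with the same req and res objects.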

We can now fire up the server and try it out:

```shell
$ node index.js
Listening on port 3000...
```

Send the following request, which should take a while:

```shell
$ curl --get "http://localhost:3000/fibonacci?n=46"
```

And while it’s still in progress, send this request from a different terminal window:

```shell
$ curl --get "http://localhost:3000/"
```

As expected, the server stays responsive and responds to the second request immediately, despite having a CPU-bound task currently running (initiated by the first request). We can also send multiple /fibonacci requests and have them executed in parallel, limited by the number of available CPU cores. That’s awesome!

Note that in this example, just like we did with child processes, we create a new worker for each incoming request, and use it only once. You really shouldn’t do that, but rather use a worker pool to reuse existing workers. More on that in the ‘Using a worker pool’ section.

Using a worker pool

In the above code examples for both child processes and worker threads, we’ve created a new worker/process for each incoming request and used it only once. This shouldn’t be done in real-world applications, because:

- Creating a new worker/process is expensive. For best performance, they should be reused.

- You have no control over the number of workers/processes created. This leaves you vulnerable to DoS attacks (or just an innocent overload).

To fix that, you should use a worker pool to manage your workers/processes. You can implement one yourself, but I’d generally recommend using an existing, battle-tested implementation. A notable example would be the workerpool library, which is fairly popular and easy to use. It works with both worker threads and child processes.
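To give a feel for what such a pool does under the hood, here is a deliberately small, hand-rolled sketch. It is not the workerpool library’s API and not a replacement for it: TinyWorkerPool, poolWorkerSource, and the inline eval worker are all assumptions made for illustration, and a crashed worker is simply dropped rather than replaced. A fixed number of long-lived workers is created once, and tasks wait in a queue until a worker is free:

```javascript
const { Worker } = require('worker_threads');

// Hypothetical long-lived worker: answers one message per task.
const poolWorkerSource = `
  const { parentPort } = require('worker_threads');
  function fibonacci(num) {
    if (num <= 1) return num;
    return fibonacci(num - 1) + fibonacci(num - 2);
  }
  parentPort.on('message', (n) => parentPort.postMessage(fibonacci(n)));
`;

class TinyWorkerPool {
  constructor(size) {
    this.workers = []; // every worker ever created by this pool
    this.idle = [];    // workers waiting for a task
    this.queue = [];   // tasks waiting for a worker
    for (let i = 0; i < size; i++) {
      const worker = new Worker(poolWorkerSource, { eval: true });
      this.workers.push(worker);
      this.idle.push(worker);
    }
  }

  // Queue a task and resolve with the worker's answer.
  run(n) {
    return new Promise((resolve, reject) => {
      this.queue.push({ n, resolve, reject });
      this.dispatch();
    });
  }

  // Hand queued tasks to idle workers until one of the two runs out.
  dispatch() {
    while (this.idle.length > 0 && this.queue.length > 0) {
      const worker = this.idle.pop();
      const task = this.queue.shift();
      const onMessage = (result) => {
        worker.off('error', onError);
        this.idle.push(worker); // reuse the worker for the next task
        task.resolve(result);
        this.dispatch();
      };
      const onError = (err) => {
        worker.off('message', onMessage);
        task.reject(err); // the crashed worker is not returned to the pool
      };
      worker.once('message', onMessage);
      worker.once('error', onError);
      worker.postMessage(task.n);
    }
  }

  // Stop all workers, e.g. on server shutdown.
  close() {
    return Promise.all(this.workers.map((worker) => worker.terminate()));
  }
}
```

In the server, you would create a single pool at startup (sized, for instance, by require('os').cpus().length) and call pool.run(n) in the request handler. Because the number of workers is fixed, a burst of requests only makes the queue longer instead of spawning more threads.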

Summary

Node.js has come a long way in minimizing the performance impact of running CPU-bound tasks, with worker threads being the latest (and arguably greatest) addition to its toolbox.

With that being said, and while the current state is sufficient for many applications, Node.js will probably never be a perfect fit for truly CPU-intensive applications. And that’s OK. That’s just not what Node was designed to do.

We can look at all software projects as collections of trade-offs. There is no silver bullet. No software project will ever be appropriate for all situations.

Successful software projects make trade-offs that enable them to excel at their core use case, while (hopefully) leaving some room for flexibility.

And that’s exactly what Node.js does.

Node.js is outstanding at what it was designed to do (I/O-intensive applications). And while it’s not great at what it wasn’t designed to do (CPU-intensive applications) - it still gives you the flexibility and provides a reasonable approach to do that.

In the end, you should strive to use the right tool for the job™. In this case, it means deciding if Node’s ability to run CPU-bound tasks is good enough for your application, or if you should look at alternative solutions that were specifically designed with CPU-intensive applications in mind.